Accelerating AI-Powered Productivity with AI PCs

We compared model inference speed on Mac M1, Mac M3, and Intel-powered Dell Ultra 9 laptops.

Find out why we are so excited: the results will (pleasantly) surprise you!

Download our white paper to discover how AI PCs, with their powerful capabilities, are poised to decentralize AI workflows.

Leading-Edge AI Updates from LLMWare

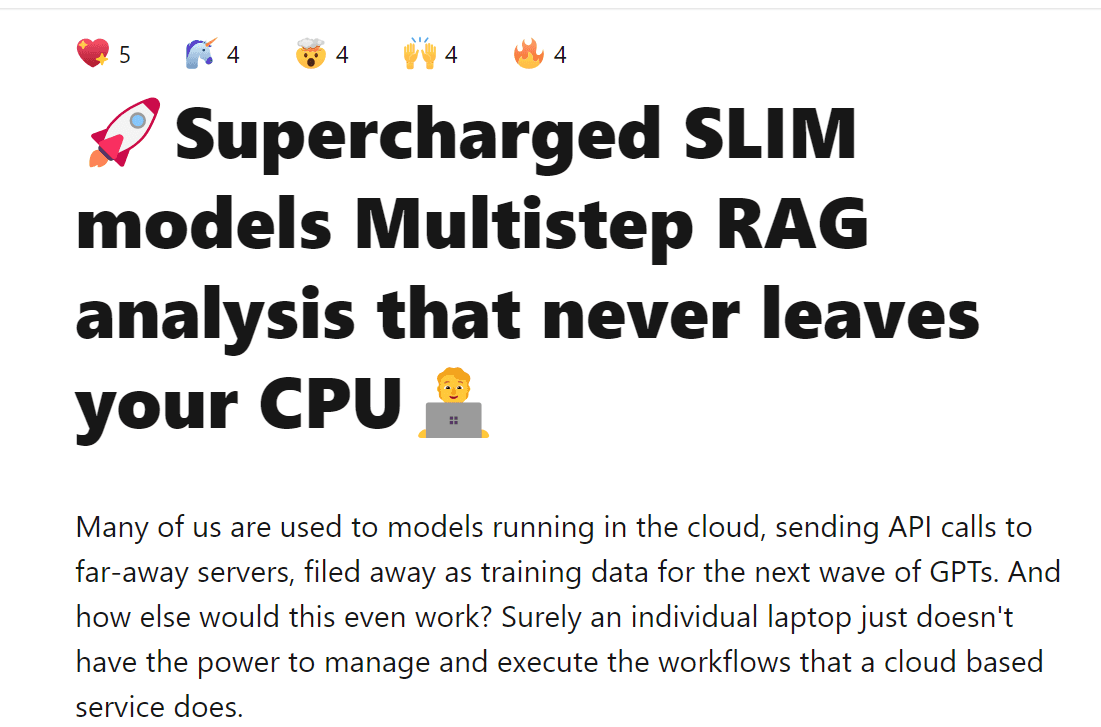

“I've been building upon the LLMWare project for the past 3 months. The ability to run these models locally on standard consumer CPUs, along with the abstraction provided to chop and change between models and different processes is really cool.

I think these SLIM models are the start of something powerful for automating internal business processes and enhancing the use case of LLMs. Still kinda blows my mind that this is all running on my 3900X and also runs on a bog standard Hetzner server with no GPU.” - User review on Hacker News

It's time to join the thousands of developers and innovators on LLMWare.ai