Accelerating AI-Powered Productivity with AI PCs

Read more

We compared model inference speed on Mac M1, M3 and Dell Ultra 9/Intel-powered laptops.

Find out why we are so excited - the result will (pleasantly) surprise you!

Discover how AI PCs are poised to decentralize AI workflows with their powerful capabilities by downloading our white paper.

Revolutionizing AI Deployment

Unleashing AI Acceleration with Intel's AI PCs and Model HQ by LLMWare.

The future of decentralized AI is here. Find out how Model HQ will enable easy, seamless deployment of lightweight GenAI apps in the enterprise with AI PCs.

Leading-Edge AI Updates from LLMWare

“I've been building upon the LLMWare project for the past 3 months. The ability to run these models locally on standard consumer CPUs, along with the abstraction provided to chop and change between models and different processes is really cool.

I think these SLIM models are the start of something powerful for automating internal business processes and enhancing the use case of LLMs. Still kinda blows my mind that this is all running on my 3900X and also runs on a bog standard Hetzner server with no GPU.” - User review on Hacker News

Model HQ Now Serving Arrow Lake

Read about our Partner Solution for Intel Arrow Lake

Learn More

Blogs

7 AI Open Source Libraries To Build RAG, Agents & AI Search

Saurabh Rai

11/14/2024

Towards a Control Framework for Small Language Model Deployment

Darren Oberst

11/03/2024

Hacktoberfest 2024: A Journey of Learning and Contribution

K Om Senapati

11/02/2024

Model Depot

Darren Oberst

10/28/2024

How I Learned Generative AI in Two Weeks (and You Can Too): Part 2 - Embeddings

Julia Zhou

10/11/2024

11 Open Source Python Projects You Should Know in 2024 🧑💻🪄

Arindam Majumder

09/16/2024

Rag Simplified

Rohan Sharma

09/14/2024

How I Learned Generative AI in Two Weeks (and You Can Too): Part 1 - Libraries

Julia Zhou

09/13/2024

Getting Work Done with GenAI: Just do the opposite — 10 contrarian rules that may actually work

Darren Oberst

09/02/2024

LLMware.ai 🤖: An Ultimate Python Toolkit for Building LLM Apps

Rohan Sharma

08/29/2024

Building the Most Accurate Small Language Models — Our Journey

Darren Oberst

08/26/2024

Best Small Language Models for Accuracy and Enterprise Use Cases — Benchmark Results

Darren Oberst

08/26/2024

Evaluating LLMs and Prompts with Electron UI

Will Taner

08/07/2024

Dueling AIs : Questioning and Answering with Language Models

Prashant Iyer

07/25/2024

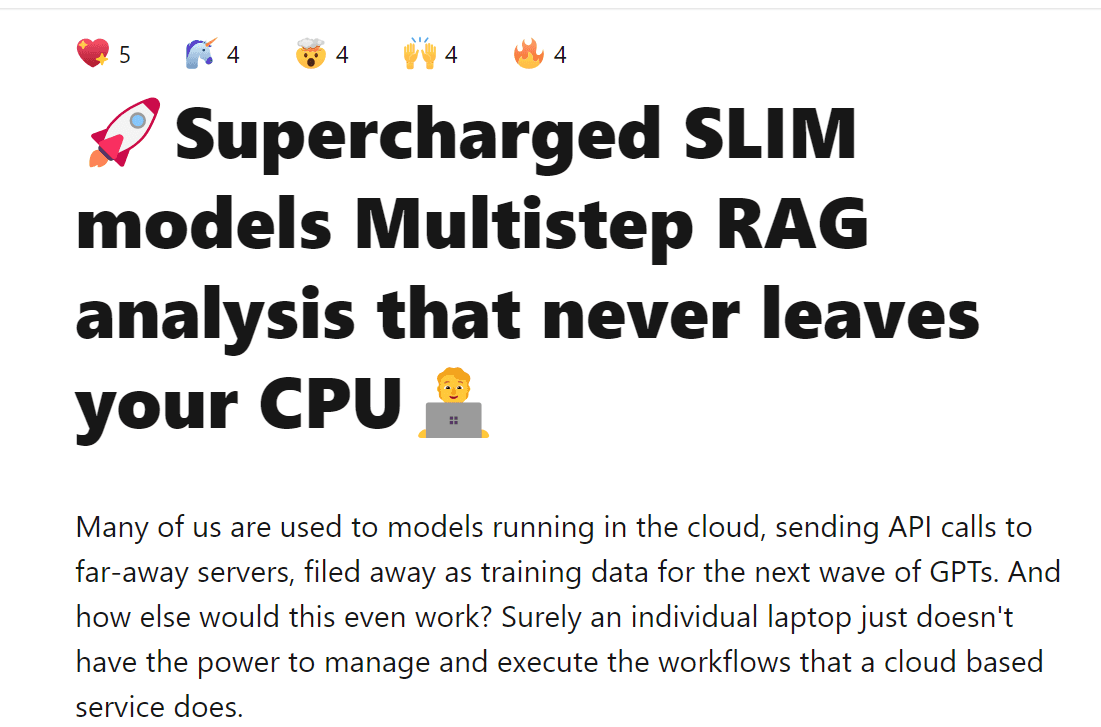

Supercharged SLIM models: Multistep RAG analysis that never leaves your CPU

Simon Risman

07/02/2024

From Sound to Insights: Using AI🤖 for Audio File Transcription and Analysis!

Prashant Iyer

06/28/2024

AI-Powered Contract Queries: Use Language Models for Effective Analysis!

Prashant Iyer

06/28/2024

The Hardest Problem RAG... Handling 'NOT FOUND' Answers

Will Taner

06/24/2024

Are we prompting wrong? Balancing Creativity and Consistency in RAG.

Simon Risman

06/18/2024

Sentiment Analysis using CPU-Friendly Small Language Models

Will Taner

06/17/2024

Create Phi-3 Chatbot with 20 Lines of Code (Runs Without Wifi)

Will Taner

06/06/2024

SLIMs: small specialized models, function calling and multi-model agents

Darren Oberst

05/23/2024

2024 GitHub Accelerator: Meet the 11 projects shaping open source AI

Stormy Peters

05/23/2024

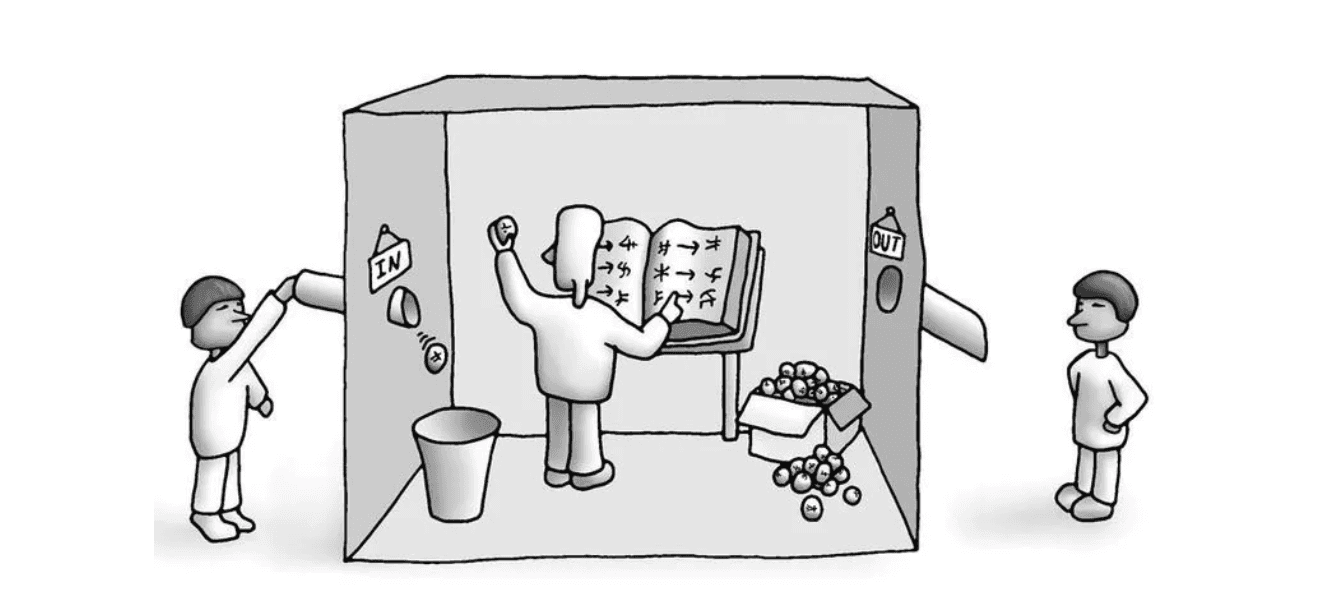

Thinking does not happen one token at a time…

Darren Oberst

05/14/2024

LLMWare.ai Selected for 2024 GitHub Accelerator: Enabling the Next Wave of Innovation in Enterprise RAG with Small Specialized Language Models

Asif Razzaq

05/23/2024

Function Calling LLMs: Combining Multiple Models for Better RAG Performance

Shanglun Wang

03/29/2024

How to Generate Structured Output with LLM?

Yang & Allahyari

03/28/2024

Supercharge Your Research Analysis with SLIM Models on CPU using LLMWare

toolify.ai

2/27/24

The Future of Financial AI: Stock Sentiment Analysis with SLIM Models

Yanli Liu

2/22/24

LLMWare Launches SLIMs: Small Specialized Function-Calling Models for Multi-Step Automation

Asif Razzaq

2/13/24

Go Open, go Lean: LLMWare now can boost your AI-powered enterprise.

Fabio Matricardi

2/12/24

LLMWare: The Swiss Army Knife for Building Enterprise-Grade LLM Applications

Amanatullah

2/12/24

Easy RAG and LLM tutorials to learn how to get started to become an "AI Developer" (Beginner Friendly)

Namee

1/30/24

Run Llama Without a GPU! Quantized LLM with LLMWare and Quantized Dragon by @shanglun

Shanglun Wang

1/7/24

LLMWare unified framework for developing LLM apps with RAG

Julian Horsey

1/4/24

Can an LLM Replace a FinTech Manager? Comprehensive Guide to Develop a GPU-Free AI Tool for Corporate Analysis

Gerasimos Plegas

12/19/23

6 Tips to Becoming a Master LLM Fine-tuning Chef

Darren Oberst

12/18/23

Implement Contextual Compression And Filtering In RAG Pipeline

Plaban Nayak

12/16/23

Production ready Open-Source LLMware are here!

Fabio Matricardi

12/12/23

Meet AI Bloks: An AI Startup Pioneering the First Out-of-the-Box, Easy-to-Use, Integrated Private Cloud Solution for LLMs in the Enterprise

Asif Razzaq

12/12/23

The Future Of AI Is Small And Specialized

Darren Oberst

11/29/23

LLMWare Launches RAG-Specialized 7B Parameter LLMs: Production-Grade Fine-Tuned Models for Enterprise Workflows Involving Complex Business Documents

Asif Razzaq

11/17/23

GPT-Like LLM With No GPU? Yes! Legislation Analysis With LLMWare

Shanglun Wang

11/10/23

RAG-Instruct Capabilities: “They Grow up So Fast”

Darren Oberst

11/7/23

How to Evaluate LLMs for RAG?

Darren Oberst

11/5/23

Innovating Enterprise AI: LLMWare Empowers LLM-Based Applications

multiplatform.ai

11/5/23

Open Source LLMs in RAG

Amanatullah

10/31/23

Techniques for Automated Source Citation Verification for RAG

Darren Oberst

10/16/23

Meet LLMWare: An All-in-One Artificial Intelligence Framework for Streamlining LLM-based Application Development for Generative AI Applications

Asif Razzaq

10/15/23

Evaluating LLM Performance in RAG Instruct Use Cases

Darren Oberst

10/15/23

Small Instruct-Following LLMs for RAG Use Case

Darren Oberst

07/03/2024

The Emerging LLM Stack for RAG

Darren Oberst

10/3/23

Are the Mega LLMs driving the future or they already in the past?

Darren Oberst

10/1/23

View More

YouTube

Check out our YouTube Channel about LLMWare!

View More >>

It's time to join the thousands of developers and innovators on LLMWare.ai

Get Started

Learn More